Few-shot Algorithms for Consistent Neural decoding

FALCON aims to provide rigorous selection criteria for robust iBCI decoders, easing their translation to real-world devices.

Addressing real-world challenges

Stable iBCI decoding over weeks, with minimal data collected on new days.

Diverse datasets

Five curated datasets span movement and communication iBCI tasks in humans and non-human primates.

Flexible evaluation platform

One benchmark to compare few-shot, zero-shot, and test-time adaptive approaches.

Data-efficient decoding

FALCON evaluates how consistently iBCI decoders perform across sessions when given minimal data from new sessions, with a focus on few-shot and zero-shot adaptation.

EvalAI leaderboard

Refine and test your iBCI decoders against the live leaderboard.

Follow us:

Join our mailing list for updates and check out our GitHub repository for code and documentation.

Overview

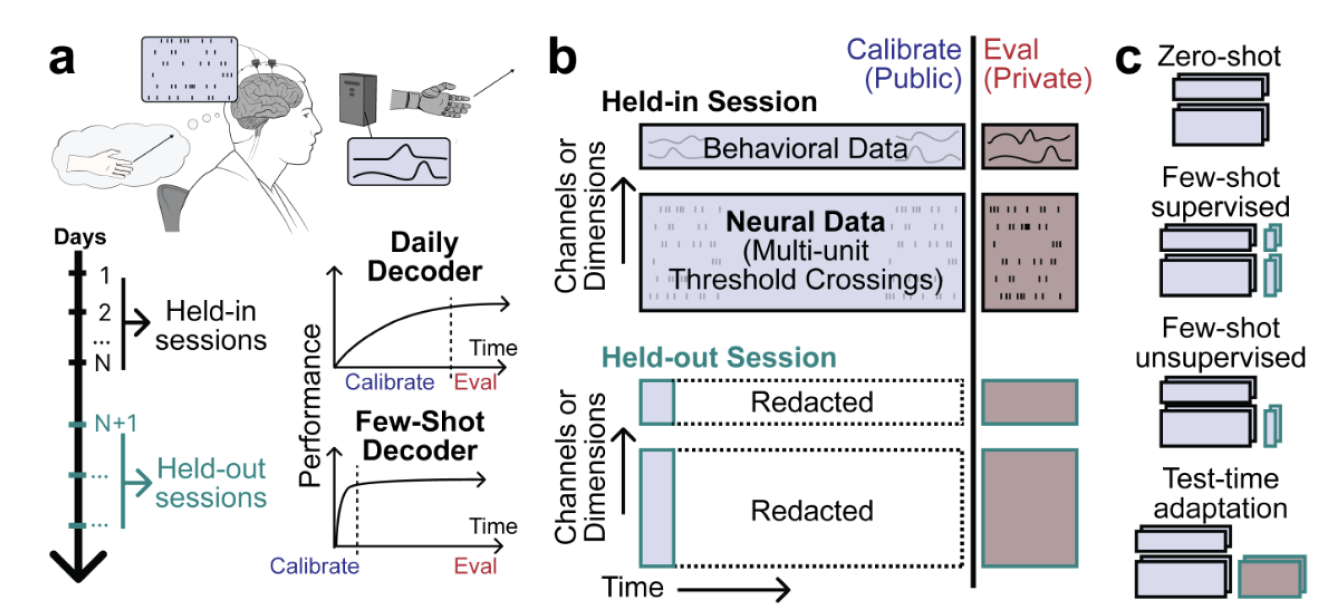

Decoding user intention from neural data provides a potential avenue to restore independence, communication, and mobility to individuals with paralysis. Spiking activity recorded with intracortical brain-computer interfaces (iBCIs) in particular provides high-quality signals for enabling such control. However, decoders built on intracortical spiking activity often fail in real-world settings because the neural signals are non-stationary. This non-stationarity can be caused by a variety of factors, including changes in the neural recording environment, changes in the user's behavior, and changes in the user's neural signals. Taken together, these changes result in inconsistent decoding performance across days. Restoring decoding performance often requires an inconvenient interruption of device use to perform a recalibration procedure, in which a user repeatedly performs a set of prescribed behaviors. Consequently, methods for adapting decoders to new days are critical for the real-world deployment of iBCIs.

We propose FALCON as a benchmark to standardize the evaluation of adaptation algorithms for iBCIs. For five decoding tasks spanning the current use of iBCIs, FALCON provides paired neural and behavioral data collected over an early period of experiments and evaluates decoders on new days. In the iBCI literature, these paired data would be termed “calibration” data, as they are used to train decoders. Decoding on new days is ill-posed without any data, yet the amount of calibration data collected on new days should be minimized. We therefore frame decoding on new days as a few-shot adaptation problem and provide a greatly reduced amount of calibration data on new days.
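The few-shot framing above can be illustrated with a toy simulation. This is a minimal sketch on synthetic data, not FALCON's data format, decoders, or official metrics: the linear mixing model, the ridge decoder, the R² score, and every name and parameter below are assumptions made for illustration. A decoder is trained on plentiful data from an early ("held-in") day, then evaluated on a later ("held-out") day whose neural-to-behavior mapping has drifted, either unchanged or after refitting on a small calibration set.

```python
import numpy as np

rng = np.random.default_rng(0)
N_CHANNELS, N_BEHAV = 20, 2  # hypothetical sizes, chosen only for the demo


def simulate_day(mixing, n_samples, noise_std=0.1):
    """Synthetic paired data: neural = behavior @ mixing + Gaussian noise."""
    behavior = rng.standard_normal((n_samples, N_BEHAV))
    noise = noise_std * rng.standard_normal((n_samples, N_CHANNELS))
    return behavior @ mixing + noise, behavior


def fit_ridge(neural, behavior, lam=1.0):
    """Closed-form ridge regression from neural activity to behavior."""
    gram = neural.T @ neural + lam * np.eye(neural.shape[1])
    return np.linalg.solve(gram, neural.T @ behavior)


def r2_score(behavior, predicted):
    """Coefficient of determination, averaged over behavior dimensions."""
    ss_res = ((behavior - predicted) ** 2).sum(axis=0)
    ss_tot = ((behavior - behavior.mean(axis=0)) ** 2).sum(axis=0)
    return float(np.mean(1.0 - ss_res / ss_tot))


# The held-out day uses a drifted mixing matrix to mimic non-stationarity.
held_in_mixing = rng.standard_normal((N_BEHAV, N_CHANNELS))
held_out_mixing = 0.5 * held_in_mixing + 0.5 * rng.standard_normal(
    (N_BEHAV, N_CHANNELS)
)

train_x, train_y = simulate_day(held_in_mixing, 2000)  # ample early-day data
calib_x, calib_y = simulate_day(held_out_mixing, 40)   # small new-day calibration set
test_x, test_y = simulate_day(held_out_mixing, 1000)   # new-day evaluation data

# Baseline: reuse the early-day decoder on the new day without any update.
held_in_decoder = fit_ridge(train_x, train_y)
zero_shot_r2 = r2_score(test_y, test_x @ held_in_decoder)

# Few-shot: refit using only the small new-day calibration set.
few_shot_decoder = fit_ridge(calib_x, calib_y)
few_shot_r2 = r2_score(test_y, test_x @ few_shot_decoder)

print(f"static decoder R2 on new day:   {zero_shot_r2:.3f}")
print(f"few-shot decoder R2 on new day: {few_shot_r2:.3f}")
```

Refitting from scratch on the calibration set is the simplest possible form of adaptation; methods that reuse the early-day decoder or that adapt without labels on new days fit into the same comparison by replacing the few-shot step.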

How do I submit a model to the benchmark?

We are hosting our challenge on EvalAI, a platform for evaluating machine learning models. On the platform, you can choose to make private or public submissions to any or all of the individual datasets.

Can I view the leaderboard without submitting?

Yes, the full leaderboard is available on EvalAI, and EvalAI is also synced with Papers With Code. We encourage participants to open-source their models, so code for some submissions may be available through the leaderboard.

Is there a deadline?

No. Submissions to the benchmark and its leaderboard are open indefinitely on EvalAI, as a resource for the community.

Citation

If you use FALCON in your work, please cite our preprint.

Contact

The FALCON benchmark is being led by the Systems Neural Engineering Lab in collaboration with labs across several universities. General inquiries should be directed to Dr. Pandarinath at chethan [at] gatech [dot] edu.